How to Build AI-Generated Dashboards from User-Defined Queries

Executive Summary:

AI-generated dashboards promise faster insight, but most implementations fail in real products. The issue is not model quality. It is architecture. Production-ready AI-generated dashboards must operate inside the analytics lifecycle, not outside it. That means intent detection rather than query generation, metadata rather than SQL, and reuse rather than constant creation. When AI respects security, business language, and existing workflows, dashboards become durable product assets. This approach shifts analytics from one-off answers to embedded decision support that scales across users, tenants, and use cases.

Key Takeaways:

- AI-generated dashboards succeed only when AI works on metadata, not raw queries.

- Security and permissions must remain unchanged for AI to be production-safe.

- Intent classification determines whether AI creates, edits, analyzes, or summarizes dashboards.

- Business language mapping improves accuracy more than model tuning.

- Reusing existing dashboards builds trust and prevents analytics sprawl.

Users expect analytics to work like everything else in your product: fast, contextual, and available inside the workflows they already use. Traditional dashboards require setup and expertise. Most AI tools trade depth for speed and return temporary answers.

An AI-generated dashboard bridges this gap. It turns questions into persistent, reusable views that work within your existing analytics stack. This article explains how production teams build them, and why architectural choices determine whether AI dashboards scale or break.

What Is an AI-Generated Dashboard?

Most teams hear the term and picture a chat window that returns a chart. That view misses the point. The real shift is not the interface. It is the artifact that the system produces.

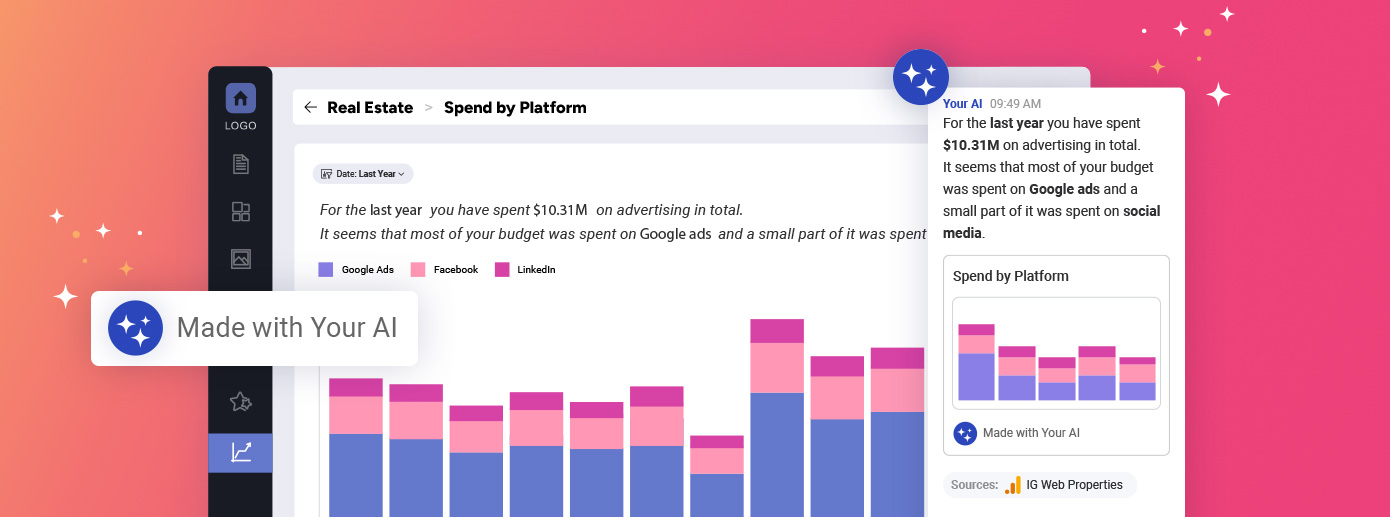

An AI-generated dashboard is a persistent dashboard created or modified from a user-defined query. The system interprets intent, selects data, chooses visualizations, and builds layout metadata. The output behaves like any other embedded dashboard in your product. It persists, supports filters and drill actions, and runs through your existing permissions and data models.

What separates an AI-generated dashboard from conversational output is how it holds up after creation.

- It persists beyond the initial question and can be saved and shared.

- It supports filters, drill actions, and visual edits like any standard dashboard.

- It runs through the same permissions and data models as manually built dashboards.

This definition matters because many tools stop at response generation. Understanding the difference sets the stage for how most AI dashboards are built today, and why that approach often breaks.

How AI-Generated Dashboards Are Commonly Built Today

Most AI dashboard implementations follow the same pattern:

- A user types a question in natural language.

- The model interprets the prompt and generates a query.

- The system runs that query against a database.

- The result renders as a chart or short response.

This approach, which often appears under labels like conversational analytics or augmented analytics, optimizes for demo speed. It feels intuitive and shows value fast without requiring teams to change their analytics stack.

The problem: the output exists only for the moment. Users can’t refine, save, or return to it later. Those limits become clear once teams try to move beyond demos into daily use.

Why Most AI-Generated Dashboards Break in Enterprise and SaaS Products

AI dashboards look impressive in demos because they optimize for fast answers. That same design breaks once products face real security, scale, and governance constraints.

Most failures start with data exposure. Many AI-generated dashboards rely on ad hoc queries created by a language model. This bypasses the security practices expected in production systems, as outlined in discussions around security with embedded analytics and broader concerns around security and analytics. Once permissions and auditability matter, trust erodes quickly.

Multi-tenant SaaS products face even stricter limits. A single prompt must respect tenant boundaries, role-based access, and data isolation. Chat-driven dashboards struggle here, as explained in analyses of multi-tenancy data in embedded analytics, because each request becomes a new surface for leakage.

User experience issues follow closely. Dashboards rendered in external tools or iframes pull users out of their workflow. Context switching reduces adoption and disrupts continuity, a common problem highlighted in comparisons of embedded analytics vs. iframes. Users stop seeing analytics as part of the product.

These failures share a root cause. AI operates outside the analytics lifecycle instead of inside it. That gap explains why teams rethink their approach and look for architectures where AI works within existing controls.

How to Secure AI-Generated Dashboards

Many teams believe AI needs direct access to data to be useful. That belief creates risk and slows adoption. Secure AI-generated dashboards follow a different path, one that keeps control inside the product.

The safest approach removes AI from the data layer entirely. Instead of querying databases, AI works with analytics metadata. This distinction is subtle, but it defines whether AI can operate in production systems.

AI Should Never Generate SQL

Some AI tools generate SQL dynamically. That design exposes databases to unpredictable behavior and permission gaps. Even well-tested models can produce queries that bypass rules.

A safer pattern: AI produces dashboard definitions using the analytics SDK model. These definitions describe structure and intent, not execution. Every dashboard still follows the same execution path as manually built ones.
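One way to enforce this boundary is to treat model output as untrusted text and accept it only if it parses as a dashboard definition. The sketch below is a minimal illustration, not the analytics SDK's actual schema: the key names, chart types, and the `parse_widget_definition` helper are all assumptions made for the example.

```python
import json

# Hypothetical schema: the only keys and chart types a widget definition may use.
ALLOWED_WIDGET_KEYS = {"title", "chart_type", "dimension", "measure", "aggregation"}
ALLOWED_CHART_TYPES = {"bar", "line", "treemap", "kpi"}


def parse_widget_definition(model_output: str) -> dict:
    """Parse model output as a widget definition and reject anything else."""
    # json.loads raises a ValueError subclass on non-JSON text such as raw SQL.
    widget = json.loads(model_output)
    if not isinstance(widget, dict):
        raise ValueError("widget definition must be a JSON object")
    unknown = set(widget) - ALLOWED_WIDGET_KEYS
    if unknown:
        raise ValueError(f"unexpected keys: {sorted(unknown)}")
    if widget.get("chart_type") not in ALLOWED_CHART_TYPES:
        raise ValueError(f"unsupported chart type: {widget.get('chart_type')!r}")
    return widget
```

Because the definition has no field that can carry a query string, a response containing SQL fails validation instead of reaching the database.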

Dashboards Must Run Through Existing Security Context

Products already enforce access rules. Replacing those rules for AI creates blind spots.

Secure AI dashboards execute only against approved data sources. User context applies automatically, including tenant isolation and role-based access. AI cannot expand visibility beyond what the user already has.

This approach reflects how AI analytics should behave in enterprise products. Intelligence adapts to existing systems.

Control What AI Can Interpret

Not all data should be exposed to AI. Teams need the ability to restrict what AI can reference. Whitelisting tables, views, or fields limits scope without reducing usefulness.

Domain language also matters. Business terms can map to approved fields and definitions. This improves accuracy while keeping exploration bounded. Governance becomes part of the configuration, not an afterthought.

This model aligns with enterprise security expectations. AI remains helpful, but never autonomous.

From User Queries to AI-Generated Dashboards, Step by Step

Users rarely ask for charts. They ask questions that reflect decisions they need to make. The challenge is translating that intent into something the product can execute and reuse.

An effective AI-generated dashboard workflow treats natural language as a starting point, not an instruction. The system interprets the user’s intent, builds a structure, and then relies on the existing analytics runtime to handle the rest.

Step 1: Interpreting User Intent

The first task is understanding what the user is trying to do. A single input can signal very different actions depending on context.

Common intent categories include:

- Create a new dashboard

- Edit an existing dashboard

- Analyze a visualization

- Summarize a dashboard

For example, “Create a sales and orders dashboard” signals creation. “Add a total sales widget” signals modification. Correct intent classification matters because each path triggers a different workflow. Without this step, systems guess, and users lose trust fast.
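The routing logic can be sketched in a few lines. The example below uses keyword rules as a stand-in for a model-backed classifier, so it only illustrates the shape of the step: the `Intent` enum, the `classify_intent` function, and the `dashboard_open` flag are hypothetical names, and a production system would likely rely on a language model plus richer context.

```python
from enum import Enum


class Intent(Enum):
    CREATE = "create"
    EDIT = "edit"
    ANALYZE = "analyze"
    SUMMARIZE = "summarize"


def classify_intent(prompt: str, dashboard_open: bool) -> Intent:
    """Keyword-based stand-in for a model-backed intent classifier."""
    text = prompt.strip().lower()
    if text.startswith("summarize"):
        return Intent.SUMMARIZE
    if text.startswith(("analyze", "explain", "why")):
        return Intent.ANALYZE
    # "Add ..." is treated as an edit only when a dashboard is already open.
    if text.startswith("add") and dashboard_open:
        return Intent.EDIT
    # Everything else falls back to creating a new dashboard.
    return Intent.CREATE
```

Note that the same prompt can resolve differently depending on context: "Add a total sales widget" is an edit when a dashboard is open and a creation request when none is.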

Step 2: Generating Dashboard Metadata

Once intent is clear, the system builds the dashboard definition at the metadata level, not the query level.

The AI defines:

- Fields and measures

- Aggregations

- Visualization types

- Layout rules

For example, “Add a sales by country treemap” results in a new widget definition. The metadata describes how that widget should look and behave. No data runs yet. This separation allows AI-generated dashboards to remain predictable and auditable.
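To make the metadata level concrete, here is a minimal sketch of what such a definition might look like. The `Widget` and `Dashboard` classes and their field names are illustrative assumptions, not the schema of any particular SDK.

```python
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class Widget:
    title: str
    chart_type: str            # visualization type, e.g. "treemap"
    measure: str               # field to aggregate
    dimension: Optional[str] = None
    aggregation: str = "sum"


@dataclass
class Dashboard:
    title: str
    widgets: List[Widget] = field(default_factory=list)
    columns: int = 2           # layout rule: widgets per row


# "Add a sales by country treemap" becomes a widget definition, not a query.
treemap = Widget(title="Sales by Country", chart_type="treemap",
                 measure="sales", dimension="country")
dashboard = Dashboard(title="Sales and Orders", widgets=[treemap])

# A plain dict that can be stored, reviewed, or handed to the runtime.
definition = asdict(dashboard)
```

Nothing in this structure touches data. It can be logged, diffed, and audited before anything executes.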

Step 3: Executing Through the Analytics Runtime

After metadata is ready, execution begins. The dashboard renders through the existing embedded analytics pipeline used by the product today.

At this stage, security and governance take over. Queries run only against approved data sources. Filters, row-level rules, and user context apply automatically. The AI does not bypass any checks because it never executes queries itself.
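The sketch below illustrates that division of labor with an in-memory stand-in for the runtime. The `UserContext` class, the `ORDERS` rows, and the `run_widget` function are assumptions invented for the example. The point is only that filtering lives in the runtime, so a widget definition cannot opt out of it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserContext:
    tenant_id: str
    allowed_regions: frozenset


# In-memory stand-in for an approved data source.
ORDERS = [
    {"tenant_id": "acme", "region": "EU", "country": "DE", "sales": 120.0},
    {"tenant_id": "acme", "region": "US", "country": "US", "sales": 300.0},
    {"tenant_id": "globex", "region": "EU", "country": "FR", "sales": 999.0},
]


def run_widget(widget: dict, user: UserContext) -> dict:
    """Sum a measure by a dimension over the rows this user may access."""
    totals: dict = {}
    for row in ORDERS:
        # Tenant isolation and row-level rules are enforced by the runtime,
        # regardless of what the widget definition asks for.
        if row["tenant_id"] != user.tenant_id:
            continue
        if row["region"] not in user.allowed_regions:
            continue
        key = row[widget["dimension"]]
        totals[key] = totals.get(key, 0.0) + row[widget["measure"]]
    return totals
```

Two users running the same AI-generated widget see different results, each limited to their own tenant and row-level permissions.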

The output behaves like any other dashboard in the system. Users can drill, filter, and interact as expected.

Step 4: Persisting and Reusing Dashboards

The final step turns output into an asset. The dashboard can be saved, shared, and revisited later.

This matters in real workflows. A user might create a dashboard during analysis, then ask, “Summarize this dashboard” before a leadership meeting. The same dashboard supports both exploration and communication. Over time, AI-generated dashboards become part of the product’s analytics layer, not disposable answers.

AI-Assisted Dashboard Editing and Evolution

Dashboards rarely stay final. Teams adjust metrics, add context, and reframe views as questions change. Most tools treat these changes as rebuilds, which adds friction and slows adoption.

AI changes that pattern when it supports iteration instead of replacement. Users adjust what exists rather than starting from scratch.

Editing Existing Dashboards with Natural Language

Once a dashboard exists, most changes are incremental. Users do not want to open an editor or understand layout rules. They want to describe the change they need.

Common edit requests include:

- “Add a total sales widget”

- “Add sales by country treemap”

- “Add a global filter by region”

Each request updates the existing dashboard metadata. Widgets appear in context, layouts adjust automatically, and permissions remain unchanged. This approach keeps dashboards stable while allowing fast iteration.
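As a rough sketch, each edit request can be modeled as a small function that takes the current definition and returns an updated one. The dictionary layout and the `add_widget` and `add_global_filter` helpers are hypothetical and exist only to show the pattern.

```python
import copy


def add_widget(dashboard: dict, widget: dict) -> dict:
    """Return a new dashboard definition with the widget appended."""
    updated = copy.deepcopy(dashboard)  # leave the saved definition untouched
    updated["widgets"].append(widget)
    return updated


def add_global_filter(dashboard: dict, field_name: str) -> dict:
    """Return a new definition with a dashboard-wide filter on one field."""
    updated = copy.deepcopy(dashboard)
    if field_name not in updated["filters"]:
        updated["filters"].append(field_name)
    return updated


original = {"title": "Sales and Orders", "owner": "user-42",
            "widgets": [], "filters": []}

# "Add a total sales widget", then "Add a global filter by region".
edited = add_widget(original, {"title": "Total Sales", "chart_type": "kpi",
                               "measure": "sales"})
edited = add_global_filter(edited, "region")
```

Returning a new definition rather than mutating the saved one keeps the previous version available for undo or review, and ownership fields pass through unchanged.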

Using AI for Analysis, Not Creation

Creation gets attention, but analysis delivers value. Teams often need explanations more than new charts.

AI can analyze a single visualization or an entire dashboard. A user might ask, “Summarize this dashboard” before a leadership review. The system inspects existing widgets and produces a clear narrative based on current data.

This avoids rework. The dashboard itself becomes the source for explanation and discussion.

From Dashboards to Decision Narratives

Dashboards often support decisions beyond the product. Executives need summaries, not interfaces.

AI helps bridge that gap. A dashboard created for operations can generate a short narrative for management. That summary can live within the product or be moved to an email or report.

Teams build once, then adapt outputs as needs change. This reduces duplication and keeps analytics aligned with real decisions.

How Domain-Specific Language and Business Context Improve AI-Generated Dashboards

A model can follow security rules and still return the wrong output. This happens when the user’s language does not match the language of your data. Accuracy depends on how well you map business terms to data fields.

Why Generic Language Breaks AI Dashboards

Users speak in business terms, not schema labels. They ask for “revenue,” “orders,” or “active accounts.” Your database may store different names and definitions.

This gap creates ambiguity. The system can pick the wrong field or metric. AI-generated dashboards can look inconsistent, even when permissions are correct. You fix this by teaching the system your vocabulary.

Mapping Business Terms to Data Fields

You can align language with a simple alias layer. You define what a term means in your business, then map it to a specific field.

For example, your team might say “chop chop” instead of “order ID.” Without that mapping, the system has to guess. With it, the system behaves predictably.

Here is a practical setup flow you can implement.

- List the business terms users actually say in tickets and calls.

- Map each term to one field, not a group of fields.

- Add a short description that clarifies meaning and usage.

- Store mappings in a config file or metadata service.

- Load mappings during AI initialization for that tenant or workspace.

- Log misses so you can expand the vocabulary over time.

This approach reduces guesswork and improves repeatability. It also makes reviews easier because mappings stay explicit.
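The setup flow above can be sketched as a small lookup with miss logging. The mapping entries, the field names such as `net_sales`, and the `resolve_term` helper are illustrative assumptions. In practice the mappings would be loaded from a config file or metadata service per tenant.

```python
import logging
from typing import Optional

logger = logging.getLogger("term_mapping")

# Hypothetical per-tenant vocabulary: business term -> (field, description).
TERM_MAP = {
    "chop chop": ("order_id", "Internal nickname for the order identifier"),
    "revenue": ("net_sales", "Sales after discounts and refunds"),
}

# Terms users asked for that have no mapping yet.
missed_terms: list = []


def resolve_term(term: str) -> Optional[str]:
    """Map a business term to exactly one field, or record a miss."""
    entry = TERM_MAP.get(term.strip().lower())
    if entry is None:
        missed_terms.append(term)
        logger.warning("No mapping for business term %r", term)
        return None
    field_name, _description = entry
    return field_name
```

Reviewing `missed_terms` periodically shows which vocabulary to add next.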

Restricting Scope With Whitelisting

Vocabulary helps the system choose the right thing. Scope control prevents it from choosing the wrong thing.

Whitelisting limits what AI can reference. You can restrict access to specific tables, views, or subject areas. This reduces accidental exploration and improves response quality. AI-generated dashboards then stay consistent across users and tenants.
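A whitelist check can run on every AI-produced widget before it is accepted. The sketch below assumes a simple table-to-fields allow-list. The `ALLOWED_FIELDS` contents and the `check_widget` helper are made up for illustration.

```python
# Hypothetical allow-list: table or view -> fields the AI may reference.
ALLOWED_FIELDS = {
    "orders": {"order_id", "country", "region", "sales"},
    "customers": {"customer_id", "segment"},
}


def is_allowed(table: str, field_name: str) -> bool:
    """True only if the table is listed and the field is approved for it."""
    return field_name in ALLOWED_FIELDS.get(table, set())


def check_widget(widget: dict) -> list:
    """Return the referenced fields that fall outside the allow-list."""
    referenced = [widget.get("dimension"), widget.get("measure")]
    return [f for f in referenced
            if f is not None and not is_allowed(widget["table"], f)]
```

An empty result means the widget stays within scope. Anything else can be rejected or sent back to the model with the list of disallowed fields.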

Reusing Existing Dashboards with AI and Vector Search

Teams quickly notice a pattern once AI enters analytics: every question creates something new. Over time, dashboards multiply, answers diverge, and trust drops.

This problem does not come from poor models. It comes from treating every question as a creation request. AI-generated dashboards scale only when reuse becomes the default.

Why Generating New Dashboards Every Time Fails

Creating a new dashboard for every question feels helpful at first. It solves the immediate request and looks productive. Over time, it creates noise.

Multiple dashboards answer the same question in slightly different ways. Teams stop knowing which one is correct. Users lose confidence and revert to manual checks. The solution: favor known, trusted assets over constant regeneration.

Embedding Meaning Into Dashboards with Metadata

Dashboards already contain structure. Titles, widgets, filters, and layouts all express intent. That intent becomes searchable once it is captured as metadata.

Each dashboard can store descriptive context. This includes what the dashboard answers, which questions it supports, and how it is used. That metadata lives alongside the dashboard definition and updates when dashboards change. AI-generated dashboards then become discoverable assets, not isolated outputs.

Finding Dashboards Instead of Rebuilding Them

Here is how this works in practice.

A team has an existing dashboard called “Orders and Sales Overview.” It includes total orders, total sales, and sales by country. The dashboard also stores metadata describing common questions it answers.

A user asks, “What are total orders?” Instead of creating something new, the system searches existing dashboards using vector similarity. It compares the question to stored dashboard metadata and returns the closest match with a confidence score.

If the confidence is high, the system loads the existing dashboard or a specific widget from it. The user gets a trusted result immediately. No duplication occurs. AI-generated dashboards now behave like a retrieval layer over validated analytics, not a factory for endless variants.
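The retrieval step can be sketched as follows. To keep the example self-contained, it uses word counts and cosine similarity as a toy replacement for a real embedding model and vector store. The second dashboard, the stored questions, the `find_dashboard` function, and the 0.5 threshold are all assumptions chosen for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would use an embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


# Dashboard metadata: the questions each dashboard is known to answer.
DASHBOARDS = {
    "Orders and Sales Overview": [
        "What are total orders?",
        "What are total sales?",
        "How do sales break down by country?",
    ],
    "Support Tickets": [
        "How many tickets are open?",
        "What is the average resolution time?",
    ],
}


def find_dashboard(question: str, threshold: float = 0.5):
    """Return (title, score) for the best match, or (None, score) if too weak."""
    q = embed(question)
    best_title, best_score = None, 0.0
    for title, known_questions in DASHBOARDS.items():
        score = max(cosine(q, embed(k)) for k in known_questions)
        if score > best_score:
            best_title, best_score = title, score
    if best_score < threshold:
        return None, best_score
    return best_title, best_score
```

When `find_dashboard` returns `None`, the system can fall back to the creation path. Otherwise it loads the matched dashboard instead of generating a new one.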

Reveal as the AI-Generated Dashboard Platform

Many teams think the hardest decision is model selection. In practice, the real challenge is architectural. AI-generated dashboards only scale when AI operates inside the analytics layer, not outside it.

This article showed what production-ready AI dashboards require: intent drives creation, metadata defines structure, existing security enforces access, and reuse prevents sprawl. When these pieces connect, dashboards become durable product assets.

That is the model behind Reveal.

- AI-generated dashboards run inside the product, with full branding control.

- AI works on metadata, preserving your existing security model.

- Users can create, edit, analyze, and reuse dashboards through natural language.

- Product teams retain control over branding, UX, and deployment.

For SaaS teams and ISVs, the outcome is practical: users reach answers faster, teams reduce dashboard maintenance, and analytics stays consistent as products grow.
